Updated on December 5, 2023

Since the widespread integration of artificial intelligence (AI) into the technological landscape, a critical question has been constantly echoing in our minds: will AI become smarter and more competent than humans and take over their roles?

Countless debates and dystopian stories have made many people apprehensive about their future. With the growing adoption of ChatGPT, the AI revolution has gained remarkable momentum, outpacing the scenarios of science fiction movies and leading many of us to realize, perhaps for the first time, that things are truly getting serious.

Let’s take a closer look at how AI got this far and how its journey has unfolded to the present day.

What is artificial intelligence?

First of all, what is intelligence? Intelligence has been defined in many ways, for example:

- “The ability to learn, understand, and think about things.” (Longman Dictionary of Contemporary English, 2006)

- “The ability to solve problems and to attain goals.” (Yuval Harari)

- “The ability to acquire and apply knowledge and skills.” (Compact Oxford English Dictionary, 2006)

AI can handle repetitive, data-driven tasks and deliver data-based results, while human intelligence excels at creative, emotional, and critically complex tasks.

Artificial intelligence (AI) involves the replication and enhancement of specific human cognitive processes. It is the field of computer science that focuses on creating systems capable of carrying out tasks that typically require human intelligence, such as learning, problem solving, or decision making.

It studies mental processes using computational models, aiming to reproduce intelligent behavior with computers. This involves automating intelligent actions and responses and simulating human intelligence processes in computer systems.

These systems interpret data, learn from it, and use the knowledge to adapt to new inputs and perform tasks.

AI techniques

AI uses techniques such as machine learning, natural language processing, computer vision, and robotics, among others, to create systems capable of tasks that mirror human intelligence. (Artificial Intelligence: A Modern Approach – S. J. Russell, P. Norvig, 1995)

- Machine learning – enables systems to improve their performance on a task through exposure to data.

- Natural language processing – facilitates the interaction between computers and human language.

- Computer vision – allows machines to interpret and understand visual information.

- Robotics – enables the creation of machines capable of performing tasks in the physical world.

These techniques work together to develop systems that can undertake complex activities such as problem solving, recognizing patterns in data, understanding and responding to human language, interpreting visual information, decision making, and performing physical tasks. These closely resemble the cognitive capabilities typically associated with human intelligence.

AI seeks to imitate aspects of human thinking using algorithms and machine learning. However, AI’s abilities are often constrained by predefined rules and lack the holistic understanding and creativity inherent in human intelligence. The goal of AI development is to complement human capabilities rather than completely replicate the complexity of human cognition.

History of AI

The modern concept of artificial intelligence (AI) began to take shape in the 1950s, but earlier ideas can be considered precursors to the field. Though rudimentary, these early concepts sowed the seeds of what would become the field of AI.

In mythology, the tale of Talos, the gigantic bronze automaton created to protect Europa from pirates and invaders, and the tale of Pygmalion’s sculpture being brought to life offer glimpses into the ancient fascination with creating lifelike entities. These, however, were only myths.

Humanity’s ingenuity and inventive mastery in crafting self-operating machines have been evident since the earliest automata of ancient Greece, built around the 4th and 3rd centuries BC.

The steam-powered Flying Pigeon of Archytas and the automatic servant of Philon (a humanoid robot designed to pour and mix wine and water), and later the intricate mechanical dolls of the 18th-century Jaquet-Droz automata, highlight the persistence of this quest to mimic human-like actions through mechanical innovation.

In the 17th century, visionary philosophers like Descartes and Leibniz explored the possibility of creating human-like artificial entities, and their work helped shape the idea that machines could resemble living beings.

In the 19th century, Charles Babbage and Ada Lovelace laid the foundations of modern computing, although their work was not explicitly focused on AI. Similarly, in the first half of the 20th century, the work of Norbert Wiener and Alan Turing set the stage for machine-simulated intelligent behavior.

However, it was in the 1950s that the field of AI truly began to emerge, with significant contributions from Alan Turing and John McCarthy.

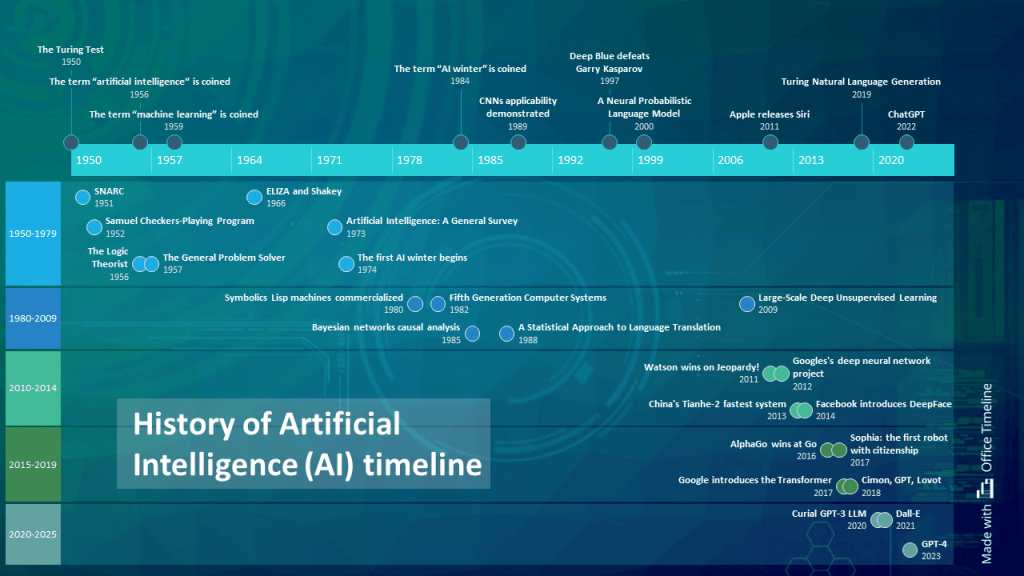

AI history: a timeline

To help you put things in perspective, we’ve created this visual timeline of the history of AI, which you can download for free as an image or PowerPoint file.

Here’s a high-level overview of major milestones recorded in the history of AI:

- 1950: The Turing Test

- 1951: SNARC

- 1952: Samuel Checkers-Playing Program

- 1956: The term “artificial intelligence” is coined

- 1956: The Logic Theorist

- 1957: The General Problem Solver

- 1959: The term “machine learning” is coined

- 1966: ELIZA and Shakey

- 1973: The report “Artificial Intelligence: A General Survey”

- 1974: The first AI winter begins

- 1980: Symbolics Lisp machines commercialized

- 1982: Fifth Generation Computer Systems

- 1984: The term “AI winter” is coined

- 1985: Bayesian networks for causal analysis

- 1988: A Statistical Approach to Language Translation

- 1989: Applicability of CNNs demonstrated

- 1997: Deep Blue defeats Garry Kasparov

- 2000: A Neural Probabilistic Language Model

- 2009: Large-Scale Deep Unsupervised Learning Using Graphics Processors

- 2011: Watson defeats humans on Jeopardy!

- 2011: Apple releases Siri

- 2012: Breakthrough in image recognition – Google’s deep neural network project

- 2013: China’s Tianhe-2 – fastest system

- 2014: Facebook introduces DeepFace

- 2016: AlphaGo defeats the world champion in the Chinese board game Go

- 2017: Sophia, the first robot to be granted citizenship

- 2017: Google introduces the Transformer

- 2018: Cimon, GPT-1, Lovot

- 2019: Turing Natural Language Generation model

- 2020: Curial, GPT-3 LLM

- 2021: DALL-E

- 2022: ChatGPT

- 2023: GPT-4

Major milestones in AI history

Let’s examine in more detail how AI’s story has unfolded from 1950 to the present day.

1950

Alan Turing introduces the Turing test, which he originally called the imitation game. The test evaluates a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

1951

SNARC, the first artificial neural network (ANN), is built by Marvin Minsky and Dean Edmonds, using 3,000 vacuum tubes to simulate a network of 40 neurons.

1952

Arthur Samuel creates the Samuel Checkers-Playing Program, the first self-learning program designed to play games.

1956

The term “artificial intelligence” is coined, and the field is officially established during the Dartmouth Summer Research Project on Artificial Intelligence, considered the birth of this field of research.

1956

A. Newell, C. Shaw and H. A. Simon, working for the RAND Corporation, develop the Logic Theorist, widely recognized as the first artificial intelligence program. Engineered for automated reasoning, this groundbreaking program proved 38 theorems from Principia Mathematica (Whitehead and Russell) and discovered more efficient proofs for some of them.

1957

A. Newell, C. Shaw and H. A. Simon (RAND Corporation) develop the General Problem Solver (GPS-I), a computer program intended to work as a universal problem-solving machine.

Frank Rosenblatt develops the perceptron, an early ANN that laid the foundation for modern neural networks.

John McCarthy creates Lisp, a programming language popular in the AI industry and among developers.

1959

Arthur Samuel popularizes the term “machine learning”, explaining that computers can be programmed to learn to outperform their programmers.

Oliver Selfridge introduces Pandemonium: A Paradigm for Learning, advancing self-improving pattern recognition in machine learning.

1966

Joseph Weizenbaum creates ELIZA, an early NLP (natural language processing) computer program capable of engaging in conversations with humans. It is considered the first chatbot.

Stanford Research Institute builds Shakey, the first mobile intelligent robot, laying the groundwork for self-driving cars and drones.

1973

The report Artificial Intelligence: A General Survey by James Lighthill is published. Critical of the unrealistic expectations surrounding AI and its limited progress, the report highlighted the field’s challenges and failures, leading to a loss of confidence and a substantial reduction in British government support for AI research.

1974

The first AI winter begins, marked by reduced funding and interest in AI due to a series of setbacks and unfulfilled promises.

1980

Symbolics Lisp machines enter the market, sparking an AI revival, but the market later collapses.

1982

Japan launches its Fifth Generation Computer Systems project, aiming to create computers with advanced AI and logic programming capabilities.

1984

Marvin Minsky and Roger Schank coin the term “AI winter” at a meeting, expressing concerns about potential industry fallout. Their cautionary prediction about AI overhype materialized three years later.

1985

Judea Pearl develops Bayesian networks for causal analysis, enabling computers to represent uncertainty with statistical techniques. Bayesian networks model intricate relationships between variables and support probabilistic inference, which facilitates informed decision-making and predictive analysis.

1988

Peter Brown et al. publish A Statistical Approach to Language Translation, shaping what became a widely studied approach to machine translation.

1989

Yann LeCun and his colleagues at AT&T Bell Labs demonstrate the applicability of convolutional neural networks (CNNs) to recognizing handwritten characters, highlighting the practical use of neural networks in real-world applications.

1997

IBM’s Deep Blue defeats world chess champion Garry Kasparov in a six-game match, marking a significant milestone in the development of AI and computing power.

2000

University of Montreal researchers publish A Neural Probabilistic Language Model, proposing feedforward neural networks for language modeling.

2009

The paper Large-Scale Deep Unsupervised Learning Using Graphics Processors is published by Rajat Raina, Anand Madhavan, and Andrew Ng, suggesting the use of GPUs (graphics processing units) for training large-scale deep neural networks in an unsupervised learning setting.

This work demonstrates the potential for GPUs to significantly accelerate the training of complex models, leading to advancements in the field of deep learning and the widespread adoption of GPU computing in machine learning research and applications.

2011

IBM’s Watson defeats human contestants on the quiz show Jeopardy!, demonstrating remarkable progress in natural language processing, machine learning and AI’s potential to understand and respond to complex human queries.

Jürgen Schmidhuber and his team develop the first CNN to achieve superhuman performance, winning the German Traffic Sign Recognition competition.

Apple introduces Siri, a voice-powered personal assistant, enabling voice-based interactions and tasks.

2012

Google’s deep neural network project achieves a breakthrough in image recognition, demonstrating the potential of deep learning in various applications. This helped trigger an explosion in deep learning research and implementation.

2013

DeepMind shows impressive results using deep reinforcement learning to play video games, surpassing human expert performance in some of them.

China’s Tianhe-2 supercomputer nearly doubles the previous record for supercomputing speed and becomes the world’s fastest system, a title it holds from 2013 to 2015. China keeps the no. 1 position through 2017 with another supercomputer, Sunway TaihuLight.

2014

Ian Goodfellow and his team invent generative adversarial networks, a type of machine learning framework used for photo generation, image transformation, and deepfake creation.

Facebook introduces DeepFace, an AI system capable of facial recognition that rivals human capabilities.

2016

AlphaGo, developed by DeepMind (a subsidiary of Google), defeats the world champion in the ancient Chinese board game Go, demonstrating the potential of AI in complex decision-making tasks.

2017

Sophia, a humanoid robot developed by Hanson Robotics, becomes the first robot to be granted citizenship by a country – Saudi Arabia (October 2017). In November 2017, Sophia became the United Nations Development Programme’s first Innovation Champion and the first non-human to hold a United Nations title.

In the paper Attention Is All You Need, Google researchers introduce the Transformer, a groundbreaking neural network architecture based solely on attention mechanisms, spurring research that paved the way for large language models (LLMs).

Physicist Stephen Hawking warns that AI could have catastrophic consequences without adequate preparation and prevention.

2018

IBM, Airbus and the German Aerospace Center DLR develop Cimon, an innovative, AI-powered space robot that can assist astronauts.

OpenAI introduces GPT-1 (117 million parameters), laying the groundwork for future large language models (LLMs).

Groove X releases Lovot, a home mini-robot that could sense and affect human moods.

AI ethics and responsible AI become major topics of discussion, with organizations and governments focusing on creating guidelines and regulations for the ethical development and use of AI.

2019

Microsoft launches the 17-billion-parameter Turing Natural Language Generation model.

A deep learning algorithm from Google AI and NYU Langone Medical Center outperforms radiologists in detecting lung cancer.

2020

University of Oxford develops Curial, an AI test for rapid COVID-19 detection in emergency rooms.

OpenAI releases GPT-3, an LLM with 175 billion parameters for humanlike text generation, marking a significant advancement in NLP.

2021

OpenAI introduces DALL-E, an AI system that can generate images from text.

The University of California produces a pneumatic, four-legged soft robot.

2022

In November, OpenAI launches ChatGPT, offering a chat-based interface to its GPT-3.5 LLM. Within five days of launch, the application had over 1 million users.

2023

OpenAI introduces the GPT-4 multimodal LLM for text and image prompts.

Elon Musk, Steve Wozniak, and others call for a six-month pause in training more advanced AI systems.

ChatGPT history and timeline

ChatGPT marks a special chapter in the history of AI. An advanced conversational AI model developed by OpenAI, its name combines “Chat” with GPT, short for Generative Pre-trained Transformer. It uses deep learning techniques and the GPT architecture to generate human-like text. It understands and replies to human language, enabling it to engage in conversations and perform various text-based tasks.

GPT, or Generative Pre-trained Transformer, is an advanced model in natural language processing (NLP) that utilizes deep learning and neural networks to comprehend and generate human-like text.

The origins of natural language processing can be traced back to the 1950s and 1960s, when initial research centered on basic language processing algorithms and machine translation.

Although progress was modest, the 1980s witnessed a gradual increase in interest, thanks to improved computational models and access to more extensive datasets.

Since then, progress in machine learning and deep learning, along with the abundance of text data, has pushed NLP forward. NLP underpins ChatGPT’s capabilities, enabling it to comprehend and produce human-like text and interact naturally with users.

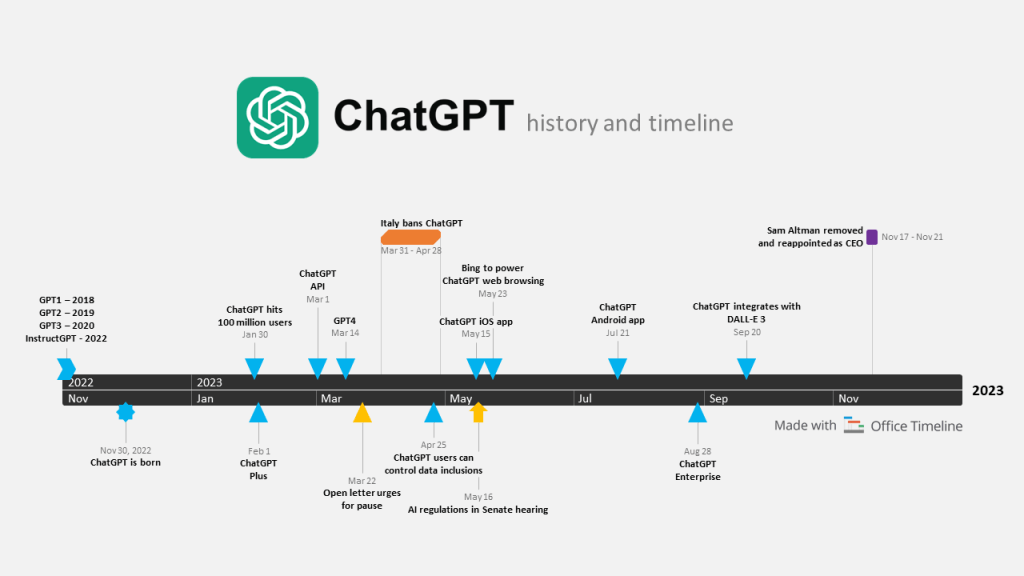

ChatGPT timeline

Here is a visual timeline of how ChatGPT has evolved from its early days to the present time.

In summary, here are the most important steps in ChatGPT’s history:

- December 11, 2015: OpenAI is created

- June 16, 2016: OpenAI publishes research on generative models

- February 21, 2018: Elon Musk steps down from OpenAI

- June 11, 2018: GPT-1 is launched

- February 14, 2019: GPT-2

- July 22, 2019: Microsoft announces partnership with OpenAI

- September 19, 2019: Fine-tuning GPT-2

- June 11, 2020: GPT-3

- January 27, 2022: InstructGPT

- November 30, 2022: ChatGPT is born

- January 30, 2023: ChatGPT hits 100 million users

- February 01, 2023: ChatGPT Plus

- March 01, 2023: ChatGPT API

- March 14, 2023: GPT-4

- March 22, 2023: Open letter urges a pause

- March 31 – April 28, 2023: Italy bans ChatGPT

- April 25, 2023: ChatGPT users can control whether their chats are used for training

- May 15, 2023: ChatGPT iOS app

- May 16, 2023: Sam Altman speaks in Senate hearing about AI regulations

- May 23, 2023: Bing to power ChatGPT web browsing

- July 21, 2023: OpenAI launches the official ChatGPT app for Android

- August 28, 2023: OpenAI launches ChatGPT Enterprise

- September 20, 2023: ChatGPT integrates with DALL-E 3

- November 17-21, 2023: CEO Sam Altman is fired and reappointed

ChatGPT: key milestones explained

December 11, 2015

OpenAI was officially established on December 11, 2015, by tech leaders including Elon Musk and Sam Altman, who were concerned about the risks of advanced AI. The company regarded AI’s rise as inevitable and acknowledged the promising business prospects it presented.

June 16, 2016

OpenAI published research on generative models, which are trained by collecting a vast amount of data in a specific domain, such as images, sentences, or sounds, and then teaching the model to generate similar data.

February 21, 2018

Elon Musk steps down from OpenAI due to conflicts of interest with his roles at SpaceX and Tesla.

June 11, 2018

GPT-1, the first Generative Pre-trained Transformer language model, was launched by OpenAI on June 11, 2018, with 117 million parameters. It could generate logical, context-appropriate replies to a given prompt.

February 14, 2019

GPT-2 (1.5 billion parameters) was unveiled on Valentine’s Day in 2019, capturing attention for its large size and strong language generation capabilities.

July 22, 2019

Microsoft and OpenAI announced a partnership to collaborate on new AI technology, with Microsoft contributing $1 billion to the development of AI tools and services. Drawing on Microsoft’s expertise and resources, OpenAI advanced its GPT models and, later, ChatGPT.

September 19, 2019

OpenAI published research on fine-tuning the GPT-2 language model with human preferences and feedback.

June 11, 2020

OpenAI launched GPT-3, the largest and most powerful language model to date (175 billion parameters). This was a significant milestone in the field of natural language processing.

January 27, 2022

OpenAI published research showing that its InstructGPT models are better than GPT-3 at following English instructions, fabricate facts less often, and produce less toxic output.

November 30, 2022

OpenAI introduces ChatGPT, which is built on GPT-3.5 language technology. It understands numerous languages and can generate contextually relevant responses. However, its proficiency depends on the data it was trained on, which limits its capabilities.

Microsoft’s later integration of OpenAI’s technology into Bing, which challenged Google’s dominance in search, added to the buzz around ChatGPT in the tech world.

January 30, 2023

Just two months after launch, ChatGPT reportedly reached 100 million monthly active users, earning the title of the fastest-growing application in history. By comparison, it took TikTok nine months to achieve the same milestone.

February 01, 2023

OpenAI announced ChatGPT Plus, a premium subscription option for ChatGPT users offering less downtime and access to new features.

March 01, 2023

OpenAI launched the ChatGPT API, allowing developers to incorporate ChatGPT functionality into their applications. Early adopters included Snapchat’s My AI, Quizlet Q-Chat, Instacart, and Shop by Shopify.

March 14, 2023

OpenAI releases GPT-4, designed to produce safer and more useful responses. Its broader general knowledge and problem-solving abilities help it handle challenging problems with greater accuracy.

March 22, 2023

The Future of Life Institute publishes an open letter calling on all AI labs to pause the training of powerful AI systems for six months, citing risks to society and humanity. The letter was signed by hundreds of the biggest names in academia, research and tech, including Elon Musk, Steve Wozniak, and Yuval Noah Harari.

March 31 – April 28, 2023

Italy’s data protection authority temporarily restricts ChatGPT over concerns about personal data collection and the lack of age verification, which could expose minors to harmful content.

April 25, 2023

OpenAI introduced new ChatGPT data controls, giving users the option to choose which conversations are included in future GPT model training data.

May 15, 2023

OpenAI releases the ChatGPT iOS app, giving users free access to GPT-3.5. ChatGPT Plus users can switch between GPT-3.5 and GPT-4.

May 16, 2023

In a Senate hearing, OpenAI CEO Sam Altman discusses how AI could be regulated without slowing innovation.

May 23, 2023

Microsoft announces that Bing will power web browsing in ChatGPT.

July 21, 2023

OpenAI launches the official ChatGPT app for Android, with improvements on the security side, amid increasing competition in the chatbot market.

August 28, 2023

OpenAI launches ChatGPT Enterprise, a business-tier version of its AI chatbot without usage caps.

September 20, 2023

OpenAI reveals DALL-E 3, an advanced version of its image generator, which is integrated into ChatGPT. This allows ChatGPT users to generate images through the chatbot.

November 17 – 21, 2023

The OpenAI board fires CEO Sam Altman and tries, but fails, to bring in another CEO as OpenAI staff publicly rally behind him. Altman then takes back his position and the conflict subsides, all in the span of just five days.

Why is ChatGPT important?

ChatGPT has significantly pushed the boundaries of what AI can achieve and sparked new applications across domains. We can confidently say that ChatGPT is a game changer in the history of AI, dividing it into the era before ChatGPT and the era after it.

This distinction is marked by a surge in public interest in AI. ChatGPT’s easy accessibility made interacting with conversational AI more relaxed and friendly, and its user-friendly interface and strong conversational abilities quickly made it hugely popular.

Yet its release to a wider audience has raised concerns about its flaws, particularly misinformation and ethical implications. A more cautious approach to its extensive deployment might have been prudent, underscoring the importance of responsible AI practices.

All in all, ChatGPT is here to stay – for better or worse. It is up to us how we make the best use of its strengths and work around its limitations.

Conclusion

Despite the huge progress in the AI field, the scope of AI dominance remains limited. If we recognize the collaborative relationship between human and artificial intelligence, we acknowledge the role of AI as a complementary tool rather than a complete substitute.

We should examine AI’s potential effects on consciousness, and the speculative idea of eventual machine sentience, with caution. While fictional warnings of an AI rebellion remain speculation, advances in AI technology represent a notable leap forward.

This progress calls for prudence: technology that affects conscious lives has ethical consequences, which highlights the importance of responsible decision-making at all levels.

Amid this changing landscape of technological evolution, an important need surfaces as we contemplate our humanity: we are urged to reconsider and reaffirm its essence. This self-reflection prompts us to rediscover overlooked aspects of our emotional existence and encourages a renewed understanding of our shared human experience.

FAQs about artificial intelligence and ChatGPT

Take a look at the commonly asked questions about AI and ChatGPT:

Artificial intelligence (AI) refers to the simulation of human intelligence processes by machines. Examples of AI include virtual assistants like Siri and Alexa, recommendation systems in streaming services, computer vision in facial recognition, robotics in automated manufacturing, and autonomous vehicles like self-driving cars.

AI has been developed by a community of researchers and scientists over time. While the concept of AI has historical roots, the term “artificial intelligence” was coined in 1956 by John McCarthy and his colleagues at the Dartmouth Conference. Notable contributors to the field include Alan Turing, Arthur Samuel, Frank Rosenblatt, and Geoffrey Hinton. Prominent figures such as Yann LeCun, Yoshua Bengio, and Andrew Ng have also contributed significantly to the advancement of machine learning and AI research.

GPT stands for “Generative Pre-trained Transformer,” a type of deep learning model developed by OpenAI. The term refers to a family of language models that includes GPT-3, the third iteration of the Generative Pre-trained Transformer. GPT-3 is known for its advanced language capabilities and its ability to generate human-like text based on the input it receives. It has been widely used in applications such as text generation, language translation, and other natural language processing tasks.

Yes, ChatGPT is a form of artificial intelligence. It belongs to the family of the Generative Pre-trained Transformer (GPT) models developed by OpenAI. ChatGPT, like other GPT models, uses deep learning techniques to process and generate human-like text based on the input it receives. It can understand and respond to a wide range of queries, engage in conversations, and provide information and assistance on various topics.

AI’s potential benefits in various sectors are significant, but there are some associated risks that have been acknowledged: job displacement, ethical concerns arising from biases, security threats such as AI-generated cyber-attacks, the ethical challenges of autonomous weapons, and the possibility of unintended consequences. However, efforts in responsible AI development and regulation can help reduce these risks and ensure AI’s safe integration into society.

Yes, Google has developed AI, which is integrated into various products and services, including Google Search, Google Photos, and Google Assistant. Google also has dedicated AI hardware like the Tensor Processing Unit (TPU). DeepMind, a leading AI research lab acquired by Google, has made major advances in deep learning and reinforcement learning (AlphaGo and AlphaFold). Google’s AI projects extend to healthcare, autonomous driving, natural language processing, and robotics, among others.

The impact of AI is dual: it has potential benefits such as increased efficiency and progress in a huge number and variety of fields, but it also has potential drawbacks, such as job displacement and ethical concerns. Responsible development and regulation can maximize benefits and reduce risks.

No, AI is not 100% accurate. Its accuracy depends on the quality of its data, the complexity of the task, and the algorithms used. Biases in the data can skew results, so human supervision is very important in critical tasks.

The concept of true AI refers to artificial general intelligence (AGI), which would possess human-like cognitive abilities: it could learn to perform any intellectual task that human beings can perform and might surpass humans in most economically valuable tasks. True AI is theoretically possible but remains a significant challenge. Current AI is specialized and not equivalent to human cognition, and AGI remains an ambitious goal that the scientific community has not yet achieved. Reaching it would require substantial progress in both hardware and software, with many technical and ethical challenges to address.

About the timelines of AI and ChatGPT

We hope that our timelines have offered some interesting insights and a clearer view of the events surrounding the development of artificial intelligence and the rise of ChatGPT.

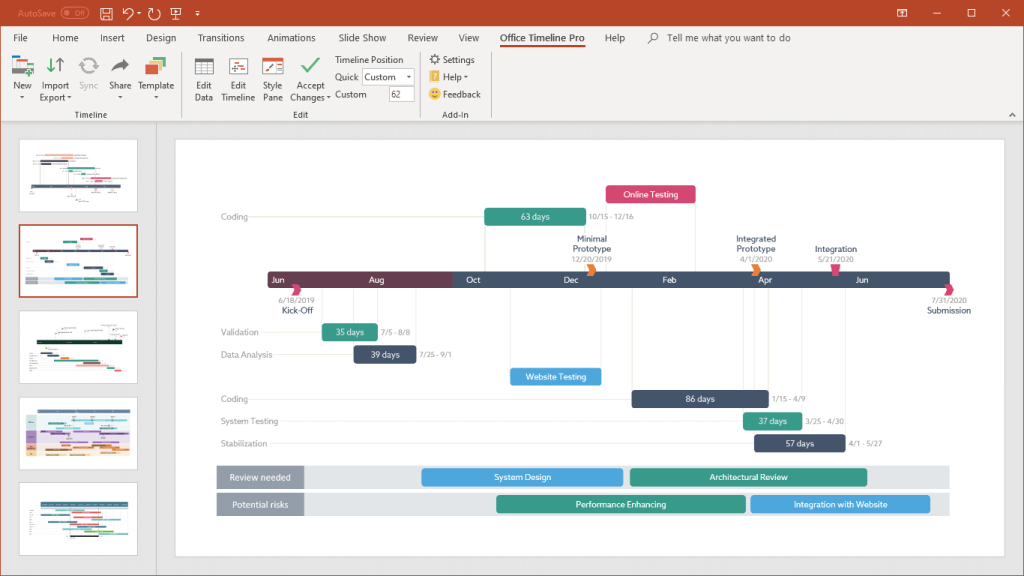

The timelines were created with the help of the Office Timeline PowerPoint add-in, a user-friendly tool that can help you quickly convert complex data into appealing PowerPoint chronologies.

Download these timelines for free and update or customize them using the Office Timeline 14-day free trial, which provides extra functionality for even more refined results.
